pprof Memory Optimization

In the previous post we identified areas for optimization, with memory allocation being the most obvious one.

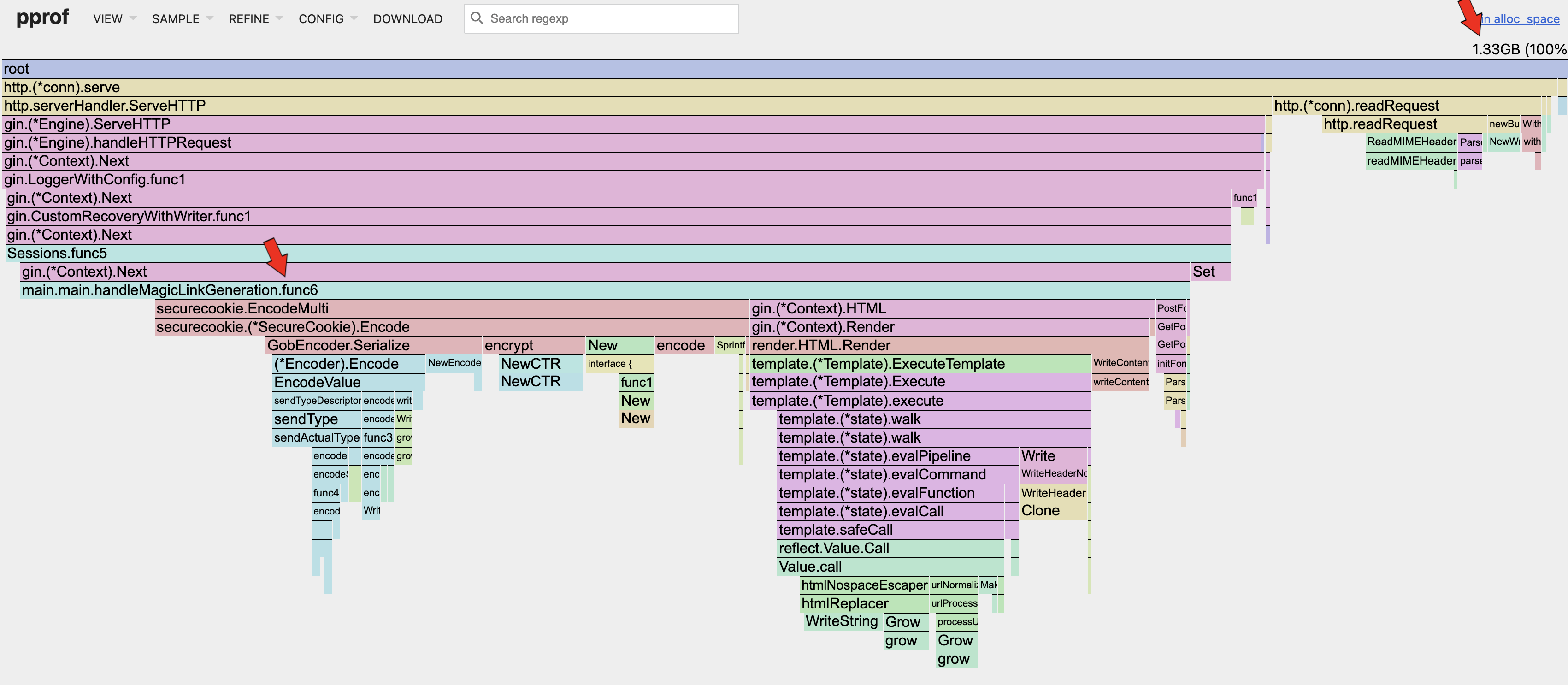

Heap Allocated Space

The Magic Link Generation handler has two main areas of high memory allocation: (1) cookie serialization and (2) HTML generation.

Cookie Serialization

The serializer exists to convert client information, in the form of key-value pairs, into an encoded string that represents the cookie. This cookie is attached to the HTTP request.

```go
// Serialize encodes a value using gob.
func (e GobEncoder) Serialize(src interface{}) ([]byte, error) {
	buf := new(bytes.Buffer)
	enc := gob.NewEncoder(buf)
	if err := enc.Encode(src); err != nil {
		return nil, cookieError{cause: err, typ: usageError}
	}
	return buf.Bytes(), nil
}
```

Looking at the Serialize function above, a new bytes buffer is created every time it is called. This is inefficient, as the buffer is discarded after each request; we need a way to reuse the buffer across requests.

sync.Pool, from the standard library's sync package, addresses this by keeping a pool of temporary objects that can be reused across calls. Fewer fresh allocations means less work for the GC.
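To make the idea concrete, here is a minimal sketch of a gob serializer that borrows its buffer from a `sync.Pool` instead of allocating one per call. `PooledGobEncoder` and `bufPool` are hypothetical names. This is not what the post ends up doing (it switches libraries below). It only shows the pooling pattern and its two usual pitfalls: resetting the borrowed buffer, and copying the result out before the buffer goes back to the pool.

```go
package main

import (
	"bytes"
	"encoding/gob"
	"sync"
)

// bufPool hands out reusable *bytes.Buffer values so Serialize does not
// allocate a fresh buffer on every call.
var bufPool = sync.Pool{
	New: func() interface{} { return new(bytes.Buffer) },
}

// PooledGobEncoder is a hypothetical variant of securecookie's GobEncoder.
type PooledGobEncoder struct{}

func (e PooledGobEncoder) Serialize(src interface{}) ([]byte, error) {
	buf := bufPool.Get().(*bytes.Buffer)
	buf.Reset() // a pooled buffer may still hold a previous caller's bytes
	defer bufPool.Put(buf)

	if err := gob.NewEncoder(buf).Encode(src); err != nil {
		return nil, err
	}
	// Copy out: buf's backing array returns to the pool and will be overwritten.
	out := make([]byte, buf.Len())
	copy(out, buf.Bytes())
	return out, nil
}

func (e PooledGobEncoder) Deserialize(src []byte, dst interface{}) error {
	return gob.NewDecoder(bytes.NewReader(src)).Decode(dst)
}
```

Returning `buf.Bytes()` directly would be a bug here: the next caller to `Get` the same buffer would overwrite the slice a previous caller is still holding.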

Furthermore, GobEncoder relies on reflection to infer types at runtime, which costs memory and adds latency.
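One measurable per-call overhead of the GobEncoder pattern: a `gob.Encoder` walks values with the reflect package, and each new encoder must also send a description of every type the first time it encodes it. Because Serialize builds a fresh encoder per call, every cookie carries that description. The sketch below compares one shared encoder against a fresh one; the `Session` type and both helpers are hypothetical.

```go
package main

import (
	"bytes"
	"encoding/gob"
)

// Session is a hypothetical cookie payload.
type Session struct {
	Email string
	Nonce int
}

// encodeFresh mirrors GobEncoder.Serialize: a new gob.Encoder per value, so
// Session's type description is written out every single time.
func encodeFresh(s Session) int {
	var buf bytes.Buffer
	if err := gob.NewEncoder(&buf).Encode(s); err != nil {
		panic(err)
	}
	return buf.Len()
}

// encodeTwiceShared reuses one encoder; gob sends the type description only
// with the first value, so the second message carries just the data.
func encodeTwiceShared(s Session) (first, second int) {
	var buf bytes.Buffer
	enc := gob.NewEncoder(&buf)
	if err := enc.Encode(s); err != nil {
		panic(err)
	}
	first = buf.Len()
	if err := enc.Encode(s); err != nil {
		panic(err)
	}
	second = buf.Len() - first
	return first, second
}
```

A shared encoder isn't an option for cookies, since each cookie must decode on its own, so the per-call type description is a cost of using gob in this role.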

go-json is a library that aims to address these shortcomings, using sync.Pool for buffer reuse along with other optimizations mentioned here.

```go
import (
	// ...
	"bytes"
	"fmt"

	"github.com/goccy/go-json"
	"github.com/gorilla/securecookie"
)

// JSONEncoder implements securecookie.Serializer using go-json.
type JSONEncoder struct{}

func (e JSONEncoder) Serialize(src interface{}) ([]byte, error) {
	var data interface{}
	switch v := src.(type) {
	case map[string]interface{}:
		data = v
	case map[interface{}]interface{}:
		// JSON object keys must be strings, so stringify every key.
		converted := make(map[string]interface{}, len(v))
		for k, val := range v {
			converted[fmt.Sprint(k)] = val
		}
		data = converted
	default:
		data = src
	}

	buf := new(bytes.Buffer)
	enc := json.NewEncoder(buf)
	if err := enc.Encode(data); err != nil {
		// securecookie's cookieError type is unexported, so wrap the error instead.
		return nil, fmt.Errorf("securecookie: json encode: %w", err)
	}
	return buf.Bytes(), nil
}

func (e JSONEncoder) Deserialize(src []byte, dst interface{}) error {
	dec := json.NewDecoder(bytes.NewReader(src))
	if err := dec.Decode(dst); err != nil {
		return fmt.Errorf("securecookie: json decode: %w", err)
	}
	return nil
}

func main() {
	// ...
	codec := securecookie.New(authKeyMagic, encryptKeyMagic)
	codec.SetSerializer(JSONEncoder{})
	// ...
}
```

Since we are using JSON encoding, values stored as map[interface{}]interface{} must first be converted to map[string]interface{}. JSON object keys must be strings, so the encoder cannot serialize a map with interface{} keys directly.

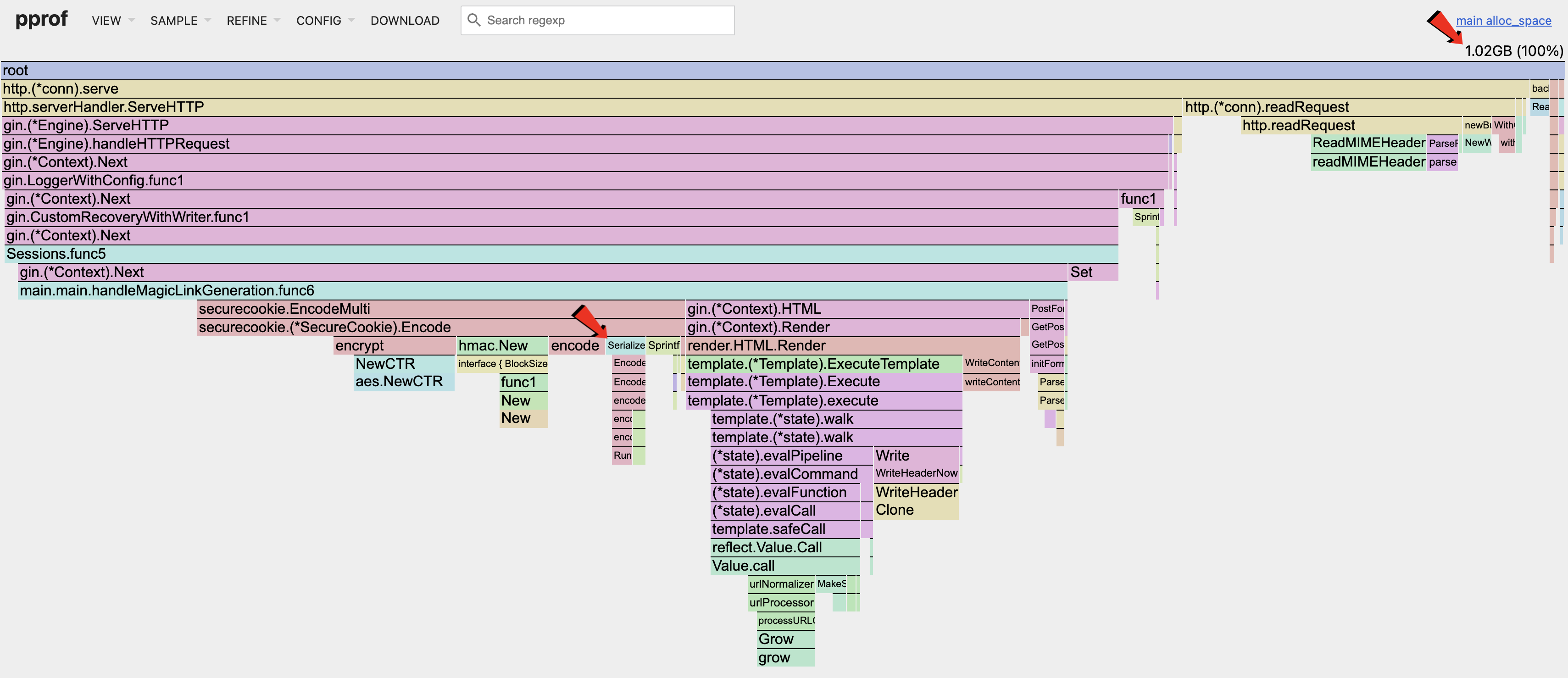

Heap Allocated Space Serializer Optimized

By switching to the new library, we can see the allocated memory drop by ~300MB! That is a significant improvement.
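The ~300MB figure comes from the whole-program heap profile. For a quicker per-call check when comparing serializers, `testing.AllocsPerRun` reports the average number of heap allocations per call and can be used outside a test binary. The sketch below assumes a small `Serializer` interface with the same `Serialize` method as `securecookie.Serializer`. It compares a copy of the gob approach with a standard-library JSON serializer; a go-json encoder like the one above can be substituted. All type names are hypothetical.

```go
package main

import (
	"bytes"
	"encoding/gob"
	"encoding/json"
	"testing"
)

// Serializer has the same Serialize method as securecookie.Serializer.
type Serializer interface {
	Serialize(src interface{}) ([]byte, error)
}

// gobSerializer mirrors the GobEncoder shown earlier: fresh buffer and encoder per call.
type gobSerializer struct{}

func (gobSerializer) Serialize(src interface{}) ([]byte, error) {
	buf := new(bytes.Buffer)
	if err := gob.NewEncoder(buf).Encode(src); err != nil {
		return nil, err
	}
	return buf.Bytes(), nil
}

// stdJSONSerializer uses encoding/json; swap in a go-json based encoder to compare.
type stdJSONSerializer struct{}

func (stdJSONSerializer) Serialize(src interface{}) ([]byte, error) {
	return json.Marshal(src)
}

// allocsPerSerialize returns the average number of heap allocations per Serialize call.
func allocsPerSerialize(s Serializer, src interface{}) float64 {
	return testing.AllocsPerRun(200, func() {
		if _, err := s.Serialize(src); err != nil {
			panic(err)
		}
	})
}
```

For numbers that include bytes per operation, the idiomatic route is a benchmark in a `_test.go` file that calls `b.ReportAllocs()`, run with `go test -bench . -benchmem`.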

HTML Generation

The default html/template approach parses template files from the file system and, as seen in the flamegraph, uses reflection at runtime to render the HTML. This is done for each request, which is extremely slow and memory-inefficient.

Using the templ library, these HTML templates are converted to Go code at build time and compiled into the same native binary as the web server. As a result, rendering an HTML response is extremely fast, with minimal memory allocation.
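The idea behind compiling templates to Go can be shown with a hand-written function. It is modeled on the general shape of generated code: static HTML as string literals, dynamic values escaped and written straight to an `io.Writer`, and no parsing or reflection at request time. This is a hypothetical illustration, not actual templ output, and `renderMagicLinkPage` and `renderToString` are made-up names.

```go
package main

import (
	"html"
	"io"
	"strings"
)

// renderMagicLinkPage mimics the shape of compiled template code: literals
// plus escaped values, written directly to w. Not real templ output.
func renderMagicLinkPage(w io.Writer, link, email string) error {
	parts := []string{
		`<p>Your magic link: <a href="`,
		html.EscapeString(link),
		`">`,
		html.EscapeString(email),
		`</a></p>`,
	}
	for _, p := range parts {
		if _, err := io.WriteString(w, p); err != nil {
			return err
		}
	}
	return nil
}

// renderToString is a convenience wrapper; strings.Builder writes never fail.
func renderToString(link, email string) string {
	var sb strings.Builder
	_ = renderMagicLinkPage(&sb, link, email)
	return sb.String()
}
```

`html.EscapeString` only escapes HTML special characters. It does not sanitize URLs, so this sketch assumes `link` is trusted.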

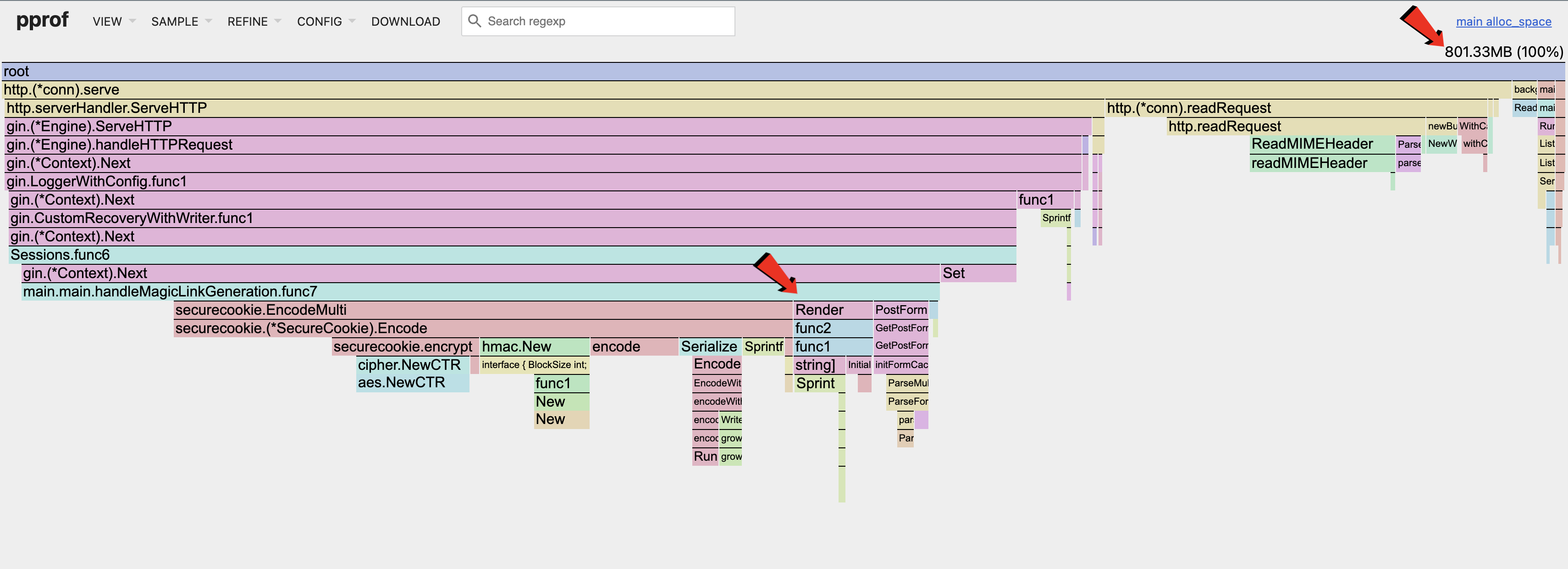

Heap Allocated Space HTML Optimized

By switching to the new library, we can see allocated memory drop by a further ~200MB! That is another significant improvement.
